The Mavic 3 Enterprise (M3E) is once again the main topic today. In our previous tests, the M3E proved capable of mapping. However, those tests produced only basic mapping outputs such as topographic maps and orthophotos. We have mentioned that the M3E is capable of 3D mapping, but we never tested it. So today's activity is 3D mapping.

Although the test was only meant to check the M3E's 3D mapping capability, the team wanted to bring in a challenger for comparison. So the Matrice 300 (M300) carrying the Zenmuse L1 payload joins the competition.

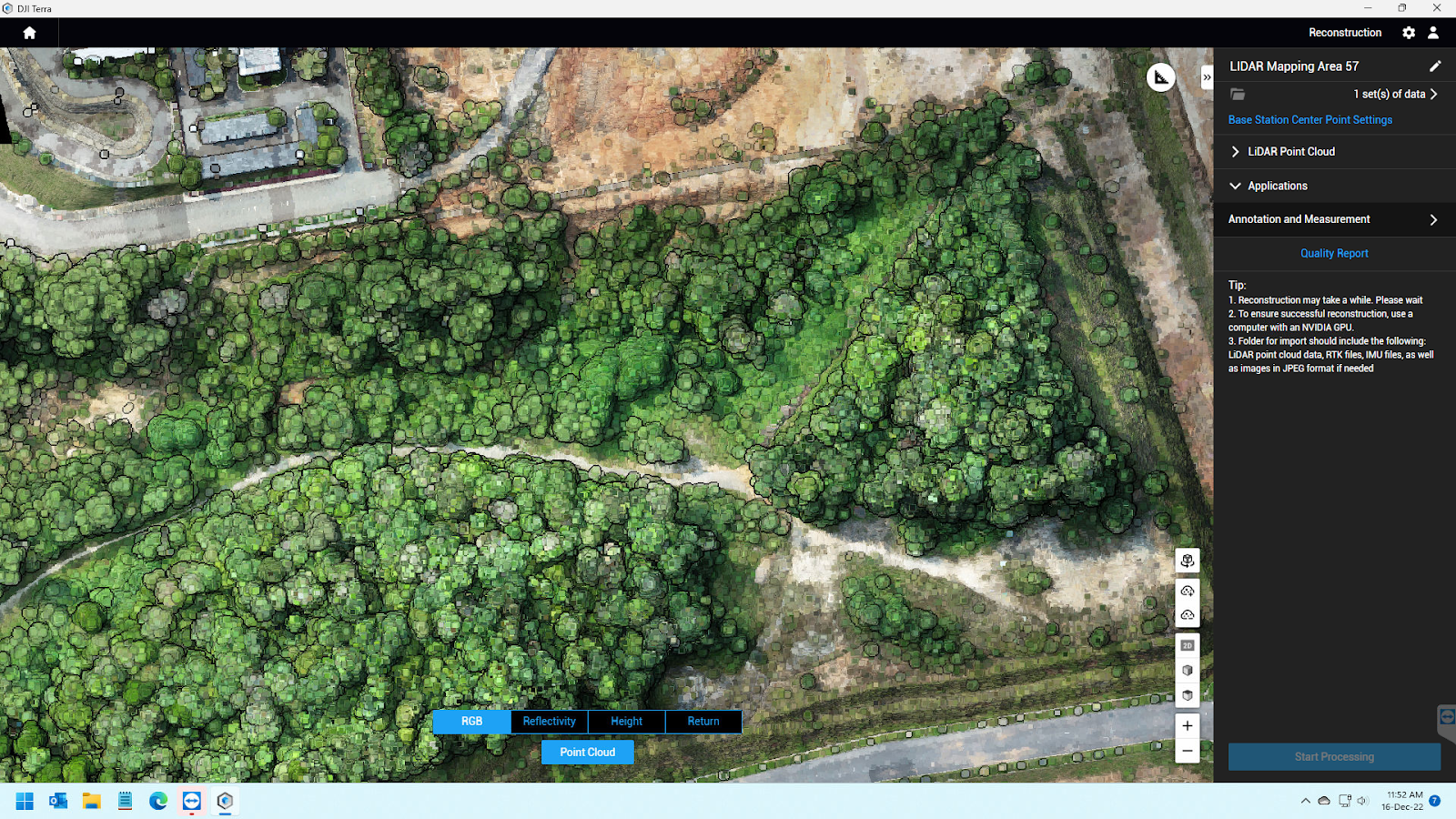

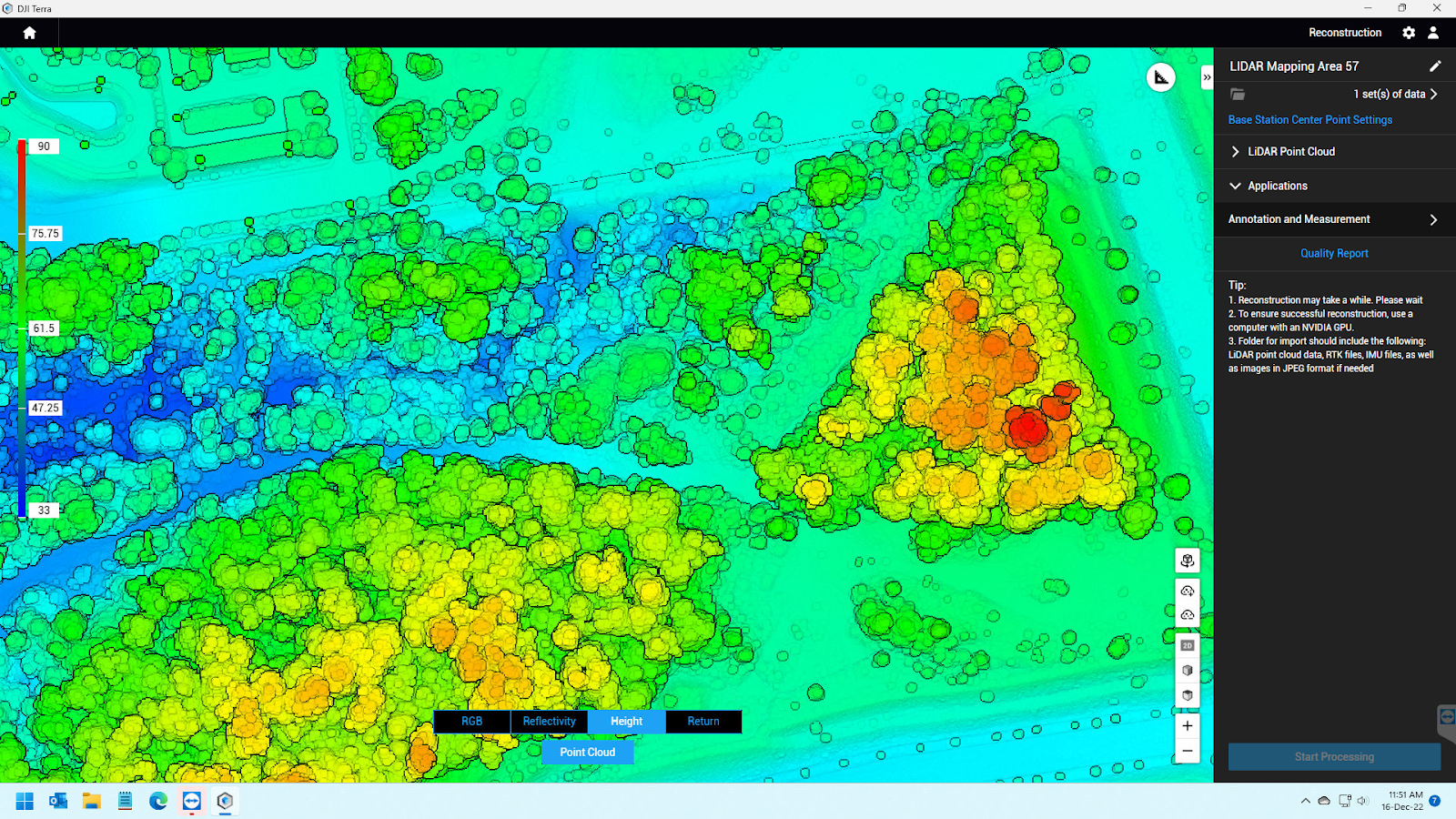

The M3E's specifications have been covered many times before, so let's look at the M300 with the Zenmuse L1 in detail. The L1 combines a 20 MP RGB camera with a LiDAR sensor. LiDAR maps an area by emitting laser pulses, timing their reflection from the sensor to the ground and back, and recording each return as a small dot; together, this massive number of dots is known as a point cloud. Uncoloured point clouds are generally shown in black and white, with black being closer to the sensor and white further away, so the height differences across the area can be seen and turned into a digitized 3D map. To add colour, the RGB camera comes in: its photos are matched to the point cloud to create a colourized point cloud and generate the 3D map.
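To make the "sensor to ground and back" idea concrete, here is a minimal Python sketch. It is not how the L1 or DJI Terra is implemented; the function names `pulse_range_m` and `colourize` are made up for illustration, and the sketch assumes one RGB sample has already been picked out of the photos for each point.

```python
# Speed of light in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def pulse_range_m(round_trip_s: float) -> float:
    """Distance to the reflecting surface for one laser pulse.

    The pulse travels to the ground and back, so the one-way
    distance is half of the total path length.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def colourize(points, colours):
    """Attach an (r, g, b) sample to each (x, y, z) point.

    Assumption: colours[i] was already sampled from the RGB photos
    at the pixel where points[i] appears.
    """
    if len(points) != len(colours):
        raise ValueError("need exactly one colour per point")
    return [tuple(p) + tuple(c) for p, c in zip(points, colours)]


if __name__ == "__main__":
    # A pulse that returns after one microsecond hit something ~150 m away.
    print(round(pulse_range_m(1e-6), 3))
    print(colourize([(0.0, 0.0, 1.0)], [(255, 0, 0)]))
```

In practice the hard part is the matching step, that is, working out which photo pixel belongs to which point, and that is done by the processing software.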

That covers the specifications. It's worth mentioning that the drones only handle data acquisition; the maps themselves are generated in DJI Terra. Now, let's go into the test and check the results.

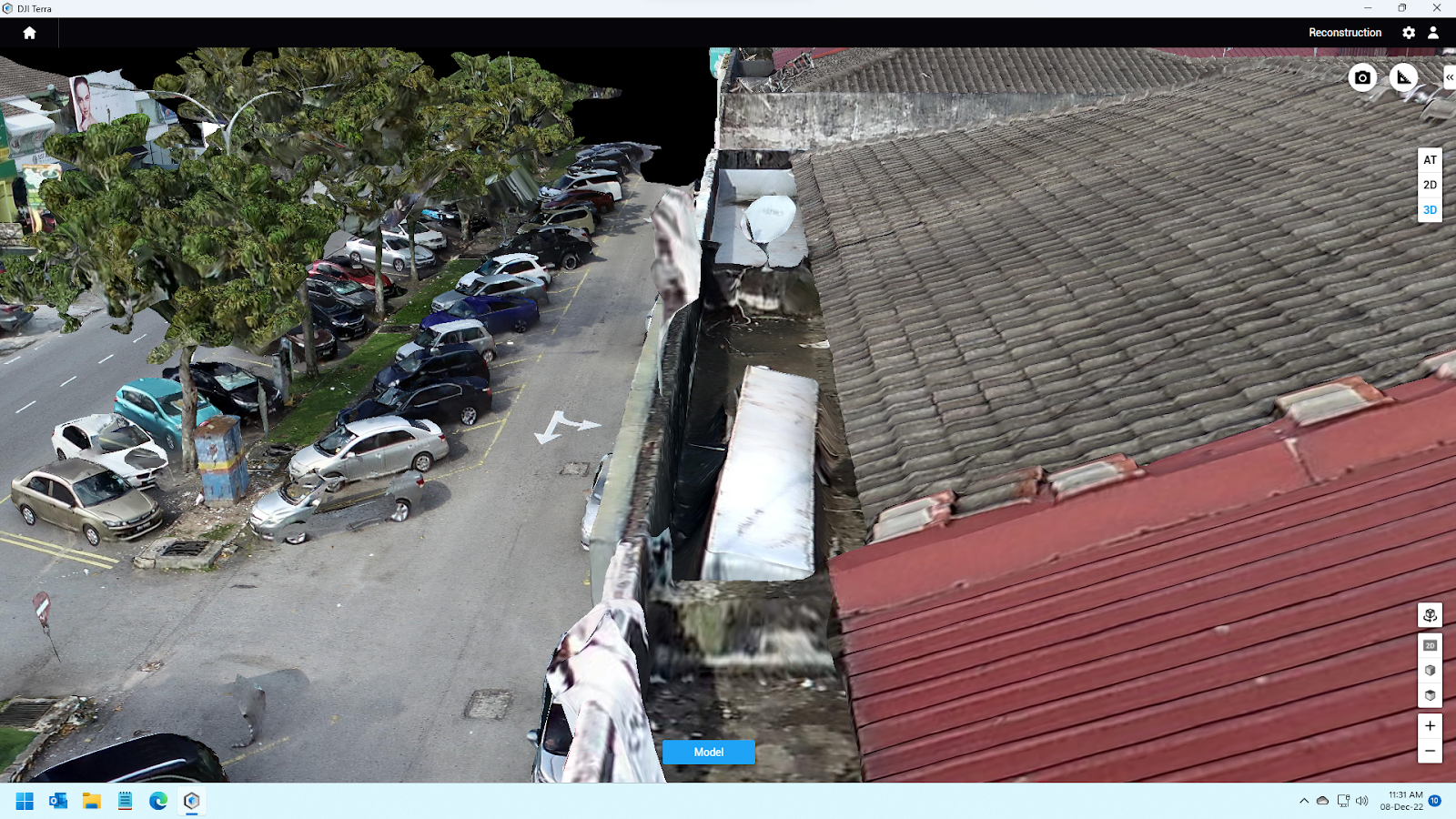

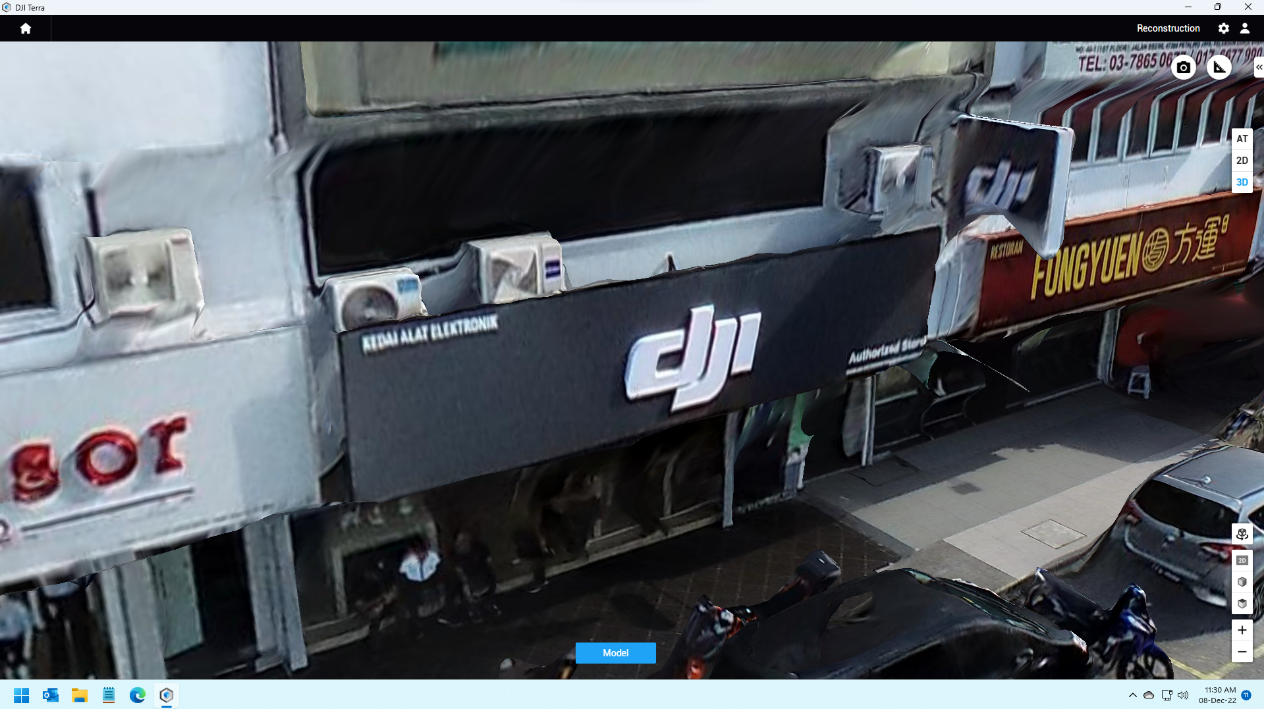

The data above was obtained by the M3E. To be honest, if it had not come from our own team, I would have believed this was an actual photograph. The result is astonishing. There is barely any distortion in the data, and the detail is sharp and accurate. Only edges and small parts are distorted, such as the air-conditioner compressor fans or the corners of the ceiling. The M3E creates its 3D map by stitching together the photos it takes, similar to how it produces a normal map. The level of completion the M3E achieves this way is truly remarkable.

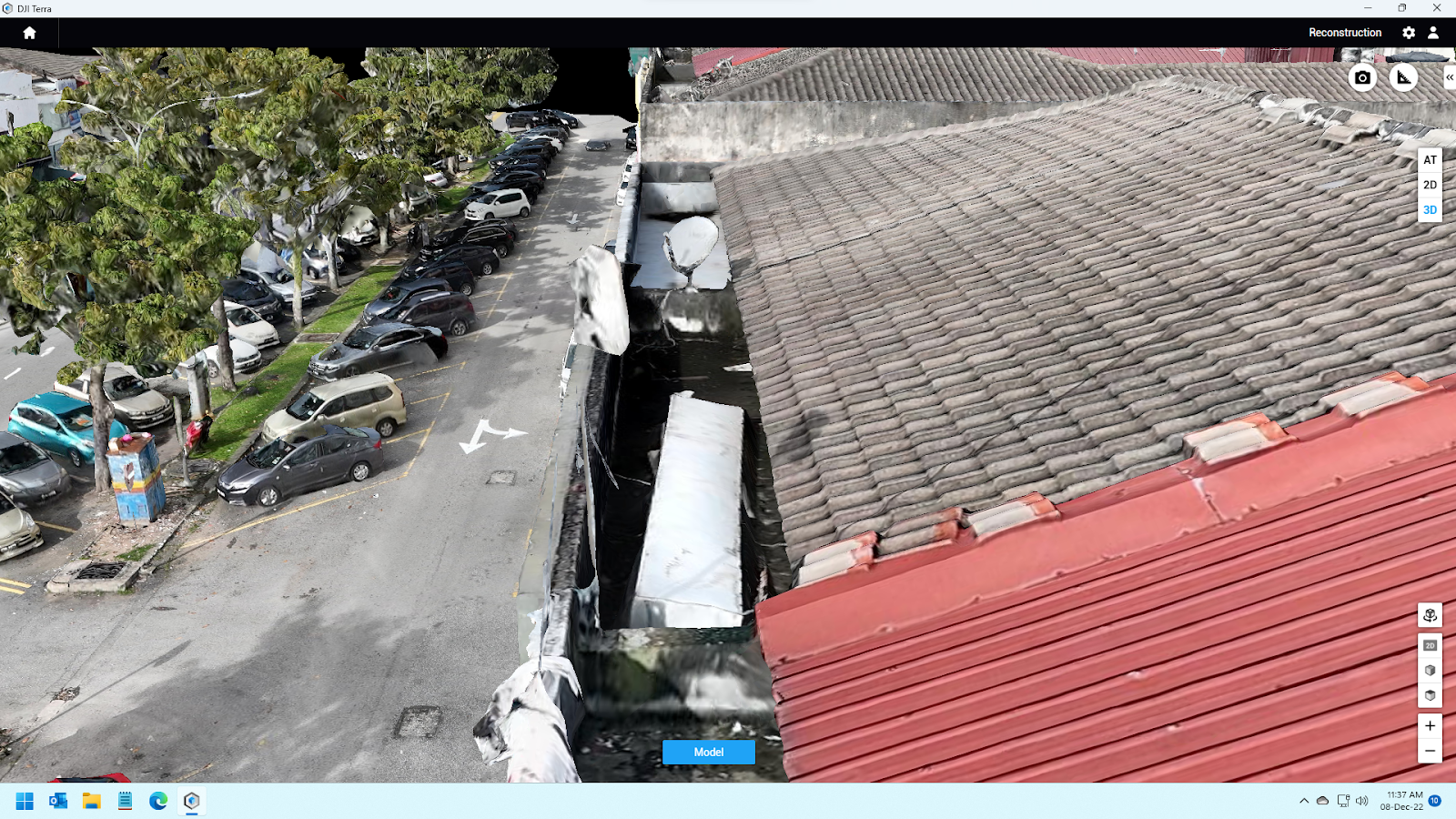

Here is the data from the challenger, the M300 with L1. The data is good, but it has more noticeable distortion. Although the positioning and detail are good, the result is less accurate and less sharp than the M3E's. When the team brought in the M300 with L1, we believed it would do far better in 3D. Given its method of 3D mapping, the team expected the M300 and L1 to do better, especially in the details. However, the result is truly shocking.

To check the results and the comparison further, the team took some samples of the data for a more detailed investigation.

M3E Sample Data | M300 + L1 Sample Data

As the team went into the details, the M300 with L1 sample showed more distortion, for example in the business sign, the aircon compressor, the roof pattern, and the wall divider, while the M3E sample showed much less.

In conclusion, it would seem the M3E has overwhelmed the M300 with L1 in terms of 3D mapping. Another kudos to the M3E. DJI really built the M3E well and created one of the best mapping drones so far. That said, the M300 with L1 does a fairly good job in 3D, though there is room for improvement. The test was never meant to be a competition, only a reference for the level of completion that 3D mapping can reach through aerial mapping.

After that conclusion, the team actually felt bad and decided to say more in defence of the M300 with L1. LiDAR data is point cloud data, so it is better suited to obtaining ground elevation: LiDAR pulses can pass through gaps in vegetation, so users get much more accurate ground elevation. The photo above shows a screenshot of ground elevation and mapping results from the LiDAR sensor. The best way to get a nice, sharp 3D model is to focus on a single feature, fly the drone slowly, and capture as much data and as many photos as possible from every angle. The 3D model produced this way will be clearer.
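As a rough illustration of why a point cloud suits ground elevation, the sketch below keeps only the lowest point in each grid cell, on the assumption that the lowest return in a cell is the one that reached the ground between the leaves. This is a deliberately simple stand-in, not what DJI Terra does; production tools use more sophisticated ground classification, and the name `lowest_return_grid` and the 1 m cell size are just for this example.

```python
import math


def lowest_return_grid(points, cell_size):
    """Map each (col, row) grid cell to the lowest z found in it.

    points: iterable of (x, y, z) in metres.
    cell_size: side length of a square grid cell in metres.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    ground = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        # Keep the lowest return: canopy hits are higher than ground hits.
        if cell not in ground or z < ground[cell]:
            ground[cell] = z
    return ground


if __name__ == "__main__":
    # Two cells, each with one canopy hit and one ground hit (made-up values).
    sample = [
        (0.2, 0.3, 12.0),  # tree canopy
        (0.6, 0.1, 3.1),   # ground seen through a gap
        (1.5, 0.2, 3.4),   # ground
        (1.7, 0.8, 9.0),   # tree canopy
    ]
    print(lowest_return_grid(sample, cell_size=1.0))
```

A photo-based model only sees the top of the canopy, so it has no lower points to fall back on in cells like these.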

So this is another form of 3D mapping, one that the M3E cannot do. The M3E does better at 3D mapping of objects, while the M300 with L1 can generate 3D maps of undeveloped areas. In the end, it really depends on what output the customer is looking for. From there, a solution can be tailored to the problem.